Agenta

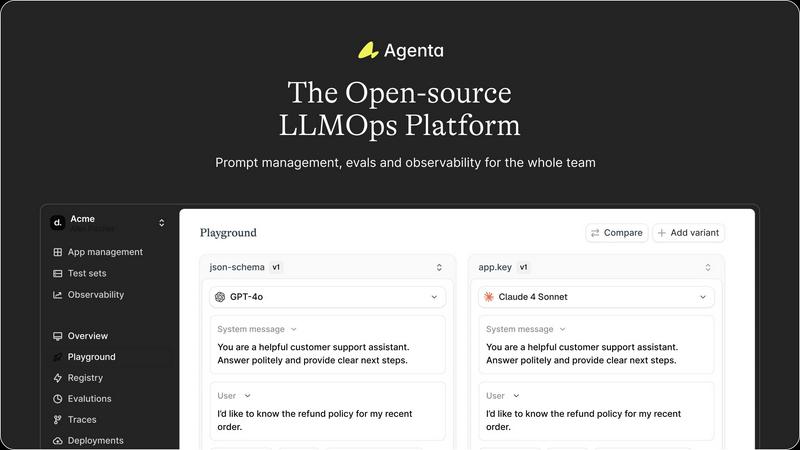

Agenta is the open-source LLMOps platform that helps teams build reliable AI applications together.


About Agenta

Agenta is an open-source LLMOps platform for building reliable, production-grade applications on large language models. It is aimed at teams of developers, product managers, and subject matter experts who want to replace scattered, ad-hoc workflows with a shared process that carries an application from experimental prototype to stable deployment. The platform brings the whole LLM application lifecycle into one place: prompt experimentation, rigorous evaluation, and comprehensive observability. Instead of relying on guesswork, teams iterate on prompts systematically, validate each change against automated and human evaluations, and debug issues using real production data. Agenta is model-agnostic and works with popular frameworks such as LangChain and LlamaIndex, which helps avoid vendor lock-in while providing the infrastructure needed to apply LLMOps best practices at scale.

Features of Agenta

Unified Experimentation Playground

Agenta provides a centralized environment where teams can experiment with different prompts, parameters, and foundation models side by side. The playground is model-agnostic, supports rapid iteration and comparison, and keeps a full version history of every change. It also connects experimentation to production data: developers can pull real user traces into the testing environment, turning a production error into a reproducible test case with a single click.
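To make "full version history" concrete, here is a minimal Python sketch of an append-only prompt history, assuming each edit to a prompt template, model, or parameter set is saved as a new immutable version. `PromptVersion`, `PromptHistory`, and their methods are hypothetical names chosen for illustration; this is not Agenta's SDK or data model.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass(frozen=True)
class PromptVersion:
    """One immutable snapshot of a prompt configuration."""
    version: int
    template: str
    model: str
    params: dict[str, Any]
    message: str


@dataclass
class PromptHistory:
    """Append-only history: every edit creates a new version, nothing is overwritten."""
    versions: list[PromptVersion] = field(default_factory=list)

    def commit(self, template: str, model: str, message: str, **params: Any) -> PromptVersion:
        v = PromptVersion(len(self.versions) + 1, template, model, dict(params), message)
        self.versions.append(v)
        return v

    def get(self, version: int) -> PromptVersion:
        # Versions are 1-based, so version n is stored at index n - 1.
        return self.versions[version - 1]

    def latest(self) -> PromptVersion:
        return self.versions[-1]

    def diff(self, a: int, b: int) -> dict[str, tuple[Any, Any]]:
        """Return only the fields that changed between two versions."""
        va, vb = self.get(a), self.get(b)
        changes = {}
        for name in ("template", "model", "params"):
            old, new = getattr(va, name), getattr(vb, name)
            if old != new:
                changes[name] = (old, new)
        return changes


history = PromptHistory()
history.commit("Summarize: {text}", "gpt-4", "initial draft", temperature=0.7)
history.commit("Summarize in three bullet points: {text}", "gpt-4", "tighter format", temperature=0.2)
print(history.latest().version, history.diff(1, 2))
```

Keeping versions immutable means any earlier configuration can be reloaded exactly and compared with a later one, which is what makes rollbacks and side-by-side comparisons safe.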

Automated Evaluation Framework

The platform replaces subjective "vibe testing" with a systematic, evidence-based evaluation process. Teams can plug in a variety of evaluators, including LLM-as-a-judge setups, built-in metrics, or custom code. Evaluations can assess not only final outputs but the full reasoning trace of complex AI agents, showing where in a multi-step run quality breaks down. The framework also supports human-in-the-loop feedback, so domain experts can add ratings and annotations directly within the workflow.
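At its simplest, a custom code evaluator is a function that scores one output against an expected answer. The sketch below assumes that shape (output and expected string in, score between 0 and 1 out) and averages each evaluator over a small test set. The function names and the test-set format are hypothetical and do not reflect Agenta's evaluator interface; an LLM-as-a-judge evaluator could fit the same signature by calling a model inside the function.

```python
from statistics import mean
from typing import Callable

# Hypothetical evaluator signature: (model output, expected answer) -> score in [0, 1].
Evaluator = Callable[[str, str], float]


def exact_match(output: str, expected: str) -> float:
    """1.0 if the output equals the expected answer, ignoring case and surrounding spaces."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def contains_expected(output: str, expected: str) -> float:
    """1.0 if the expected answer appears anywhere in the output (case-insensitive)."""
    return 1.0 if expected.lower() in output.lower() else 0.0


def evaluate(outputs: list[str], test_set: list[dict[str, str]],
             evaluators: dict[str, Evaluator]) -> dict[str, float]:
    """Score each output against its test case and average the scores per evaluator."""
    if len(outputs) != len(test_set):
        raise ValueError("need exactly one output per test case")
    return {
        name: mean(fn(out, case["expected"]) for out, case in zip(outputs, test_set))
        for name, fn in evaluators.items()
    }


test_set = [
    {"input": "Capital of France?", "expected": "Paris"},
    {"input": "2 + 2?", "expected": "4"},
]
outputs = ["Paris", "The answer is 4."]
scores = evaluate(outputs, test_set, {"exact_match": exact_match, "contains": contains_expected})
print(scores)
```

Running several evaluators over the same outputs makes their disagreements visible: here a strict exact-match check and a looser containment check score the same two answers differently.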

Comprehensive Observability & Tracing

Agenta offers full-stack observability by tracing every LLM request end-to-end. This capability allows teams to pinpoint exact failure points in complex chains or agentic workflows. Traces can be annotated collaboratively and are instantly convertible into test cases, closing the feedback loop between production monitoring and development. The platform also supports live, online evaluations in production to continuously monitor for performance regressions and data drift.
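End-to-end tracing generally means recording each step of a request as a nested span with its inputs, output, timing, and any error. The following is a minimal in-process sketch of that idea, plus a helper that turns the failing span into a test case. `Tracer`, `Span`, `first_failure`, and `to_test_case` are hypothetical names; this is not Agenta's tracing API.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class Span:
    name: str
    inputs: dict[str, Any]
    output: Any = None
    error: Optional[str] = None
    duration_s: float = 0.0
    children: list["Span"] = field(default_factory=list)


class Tracer:
    """Minimal in-process tracer: nested `span` blocks build one tree per request."""

    def __init__(self) -> None:
        self._stack: list[Span] = []
        self.roots: list[Span] = []

    @contextmanager
    def span(self, name: str, **inputs: Any):
        s = Span(name, inputs)
        # Attach to the currently open span, or start a new tree.
        (self._stack[-1].children if self._stack else self.roots).append(s)
        self._stack.append(s)
        start = time.perf_counter()
        try:
            yield s
        except Exception as exc:
            s.error = repr(exc)  # record the failure, then let it propagate
            raise
        finally:
            s.duration_s = time.perf_counter() - start
            self._stack.pop()


def first_failure(span: Span) -> Optional[Span]:
    """Return the deepest failing span along the first failing branch."""
    for child in span.children:
        found = first_failure(child)
        if found is not None:
            return found
    return span if span.error else None


def to_test_case(span: Span) -> dict[str, Any]:
    """Turn a failing span into a reproducible test case: its inputs plus the observed error."""
    return {"step": span.name, "inputs": span.inputs, "observed_error": span.error}


tracer = Tracer()
try:
    with tracer.span("answer_question", question="What is our refund window?"):
        with tracer.span("retrieve", query="refund window") as s:
            s.output = ["policy.md"]
        with tracer.span("generate", context=["policy.md"]):
            raise TimeoutError("model call timed out")
except TimeoutError:
    pass

failing = first_failure(tracer.roots[0])
print(to_test_case(failing))
```

Because the error is recorded on every enclosing span as it propagates, searching children first is what pins the failure on the innermost step (`generate`) rather than on the top-level request.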

Cross-Functional Collaboration Hub

Agenta is designed for the whole AI product team and narrows the divide between technical and non-technical roles. Domain experts and product managers get a safe, intuitive UI for editing prompts, running experiments, and reviewing evaluation results without writing code, which brings subject matter expertise directly into the development process. Developers, in turn, can perform the same actions through the API, since UI and API capabilities are kept at full parity.

Use Cases of Agenta

Enterprise Chatbot Development

Teams building customer support or internal knowledge-base chatbots use Agenta to manage hundreds of intent-specific prompts, run A/B tests between model providers (such as GPT-4 and Claude), and evaluate responses for accuracy and safety before deployment. In production, the observability features help debug hallucinated answers and chain-of-thought failures, keeping the chatbot reliable as usage grows.
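For a live A/B test between providers, one common approach (assumed here, not taken from Agenta's documentation) is to assign each user to a variant with a stable hash, so the same user always reaches the same model and a conversation never switches providers midway. `assign_variant`, the experiment name, and the weights are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, weights: dict[str, float]) -> str:
    """Deterministically map a user to a variant; repeat requests get the same model."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    # Interpret the first 8 bytes of the digest as a roughly uniform point in [0, 1].
    point = int.from_bytes(digest[:8], "big") / 2**64
    cumulative = 0.0
    for variant, weight in weights.items():
        cumulative += weight / total
        if point < cumulative:
            return variant
    # Floating-point round-off can leave point >= cumulative at the top end.
    return list(weights)[-1]


weights = {"gpt-4": 0.5, "claude": 0.5}
counts = {name: 0 for name in weights}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "support-bot-ab", weights)] += 1
print(counts)
```

Hashing the experiment name together with the user ID means each new experiment reshuffles users independently of earlier ones, while the split within one experiment stays stable.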

Complex AI Agent Pipelines

Developers creating multi-step AI agents for tasks like data analysis, research, or content summarization leverage Agenta's trace evaluation to test each intermediate step, not just the final output. This allows for precise optimization of reasoning logic and tool use, and the collaborative playground enables rapid prototyping of different agent architectures with the entire team.
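Testing each intermediate step amounts to attaching a check to every kind of step in an agent's trace rather than grading only the final answer. The sketch below assumes a trace is a list of typed steps and that each step type has its own pass/fail check. The step types, `ALLOWED_TOOLS`, and the check functions are hypothetical examples, not Agenta's trace schema.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass
class Step:
    kind: str                 # hypothetical step types: "plan", "tool_call", "answer"
    payload: dict[str, Any]


ALLOWED_TOOLS = {"search", "calculator"}  # hypothetical whitelist of tools


def check_tool_call(step: Step) -> Optional[str]:
    """Fail a tool call that targets a tool the agent is not allowed to use."""
    tool = step.payload.get("tool")
    if tool not in ALLOWED_TOOLS:
        return f"unknown tool {tool!r}"
    return None


def check_answer(step: Step) -> Optional[str]:
    """Fail a final answer that is empty or whitespace only."""
    if not step.payload.get("text", "").strip():
        return "empty answer"
    return None


# Step types without a registered check (e.g. "plan") are recorded but not graded.
CHECKS: dict[str, Callable[[Step], Optional[str]]] = {
    "tool_call": check_tool_call,
    "answer": check_answer,
}


def evaluate_trace(steps: list[Step]) -> list[tuple[int, str, Optional[str]]]:
    """Return (index, kind, failure reason or None) for every step in the trace."""
    results = []
    for i, step in enumerate(steps):
        check = CHECKS.get(step.kind)
        results.append((i, step.kind, check(step) if check else None))
    return results


trace = [
    Step("plan", {"text": "search for the figure, then compute the ratio"}),
    Step("tool_call", {"tool": "search", "args": {"q": "quarterly figure"}}),
    Step("tool_call", {"tool": "web_scraper", "args": {}}),
    Step("answer", {"text": "The ratio is 0.4."}),
]
for index, kind, reason in evaluate_trace(trace):
    print(index, kind, "ok" if reason is None else f"FAIL: {reason}")
```

In this example the final answer passes its own check, yet the trace still fails at step 2; a final-output-only evaluation would have missed the disallowed tool call.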

Regulatory Compliance & Audit Preparation

In regulated industries such as finance or healthcare, Agenta provides the audit trail that AI systems require. Teams can version-control every prompt change, document the evaluation results that justified a deployment, and use the tracing system to inspect the inputs, intermediate steps, and outputs behind any individual model decision. Together these create a verifiable record for demonstrating due diligence and model governance.

Rapid Product Prototyping & Innovation

Product teams exploring new LLM-powered features use Agenta to quickly experiment with different prompting strategies and model capabilities. The shared playground allows for fast brainstorming and iteration with stakeholders, while the evaluation framework provides concrete data on which prototype performs best against key success metrics, de-risking the innovation process.

Frequently Asked Questions

Is Agenta really open-source?

Yes, Agenta is a fully open-source platform. The core codebase is publicly available on GitHub, allowing users to inspect, modify, and self-host the entire software. This open-source model ensures transparency, prevents vendor lock-in, and enables the community to contribute to its development, fostering a tool built by and for AI practitioners.

How does Agenta integrate with existing AI stacks?

Agenta is designed for seamless integration. It is model-agnostic, working with any provider's API (OpenAI, Anthropic, Cohere, etc.) and compatible with major LLM frameworks like LangChain and LlamaIndex. You can integrate Agenta's SDK into your existing application code with minimal changes, allowing you to add experimentation, evaluation, and observability capabilities without overhauling your current architecture.

Can non-technical team members really use Agenta effectively?

Absolutely. A core design principle of Agenta is to democratize the LLM development process. The platform provides an intuitive web interface where product managers, domain experts, and other non-coders can safely edit prompts in a controlled environment, launch evaluation experiments, and review results through dashboards. This bridges the gap between technical implementation and subject matter expertise.

What is the difference between offline and online evaluations?

Agenta supports both. Offline evaluations are run on static test datasets to validate changes before deployment. Online evaluations (live monitoring) run continuously on real production traffic to detect performance regressions, data drift, or new failure modes as they happen. This dual approach ensures models are validated before launch and continuously monitored afterward, covering the entire application lifecycle.
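The relationship between the two modes can be sketched as follows: the offline run produces a baseline score on a fixed test set, and an online monitor scores a sample of live outputs and flags a regression when its rolling average falls below that baseline. `OnlineMonitor`, the sampling rate, the window size, the tolerance, and the `is_ok` scorer are all hypothetical choices for illustration, not Agenta's online-evaluation API.

```python
import random
from collections import deque
from typing import Callable, Optional


class OnlineMonitor:
    """Scores a sample of live outputs and flags a drop below an offline baseline."""

    def __init__(self, baseline: float, window: int = 200, sample_rate: float = 0.1,
                 tolerance: float = 0.05, seed: Optional[int] = None) -> None:
        self.baseline = baseline        # mean score from the pre-deployment (offline) run
        self.tolerance = tolerance      # how far the rolling mean may fall before alerting
        self.sample_rate = sample_rate  # fraction of live traffic that gets evaluated
        self.scores: deque = deque(maxlen=window)  # keeps only the most recent scores
        self._rng = random.Random(seed)

    def observe(self, score_fn: Callable[[str], float], output: str) -> None:
        """Score this production output with probability `sample_rate`."""
        if self._rng.random() < self.sample_rate:
            self.scores.append(score_fn(output))

    def rolling_mean(self) -> Optional[float]:
        return sum(self.scores) / len(self.scores) if self.scores else None

    def regressed(self) -> bool:
        # Wait for a full window so a handful of early samples cannot trigger an alert.
        if len(self.scores) < self.scores.maxlen:
            return False
        return self.rolling_mean() < self.baseline - self.tolerance


def is_ok(output: str) -> float:
    """Hypothetical stand-in for a real evaluator."""
    return 1.0 if output == "ok" else 0.0


monitor = OnlineMonitor(baseline=0.9, window=4, sample_rate=1.0)
for output in ["ok", "ok", "bad", "bad"]:
    monitor.observe(is_ok, output)
print(monitor.rolling_mean(), monitor.regressed())
```

Sampling only a fraction of traffic keeps evaluation cost bounded, which matters when the scorer is itself an LLM call; the trade-off is that a smaller sample takes longer to fill the window and detect a regression.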

You may also like:

HookMesh

Streamline your SaaS with reliable webhook delivery, automatic retries, and a self-service customer portal.

Vidgo API

Vidgo API offers a unified platform to access diverse AI models at up to 95% lower costs than competitors, streamlini...

Ark

Ark is an AI-first email API that enables instant transactional email delivery with seamless code integration.