ggml.ai

About ggml.ai

ggml.ai offers ggml, a tensor library for running machine learning on commodity hardware. Aimed at developers, it supports large models through features such as integer quantization, which shrinks the memory needed to store model weights, and automatic differentiation, which computes gradients for training.

ggml.ai does not list any pricing plans. The library is open source under the MIT license and accepts contributions from users. Commercial extensions may be offered in the future, but using the library today requires no payment.

The ggml.ai site uses a simple layout, so developers can find the library's features and resources quickly. It is approachable for both new and experienced users.

How ggml.ai works

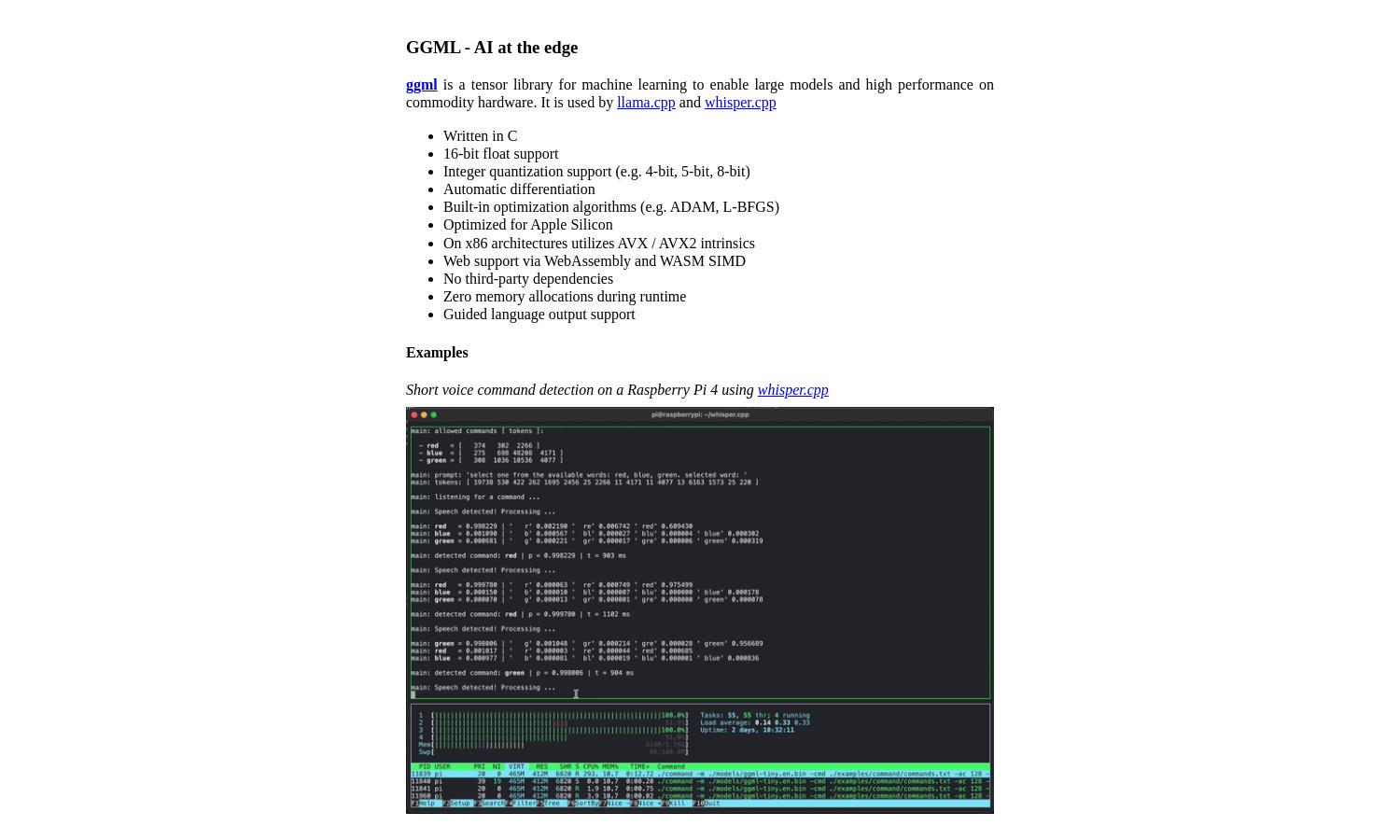

Users work with ggml.ai by reading its documentation and calling the library from their own machine learning code. The library provides tensor operations, integer quantization, and optimizers, and it is built to perform well on a range of hardware. Setup is simple, which makes quick experiments practical.
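To make the idea of integer quantization concrete, here is a minimal sketch in plain Python. It is an illustration of the general block-wise technique, not ggml's actual storage format or API: the function names, the block size of 32, and the 4-bit width are all assumptions chosen for the example.

```python
# Illustrative block-wise integer quantization (NOT ggml's real format or API).
# Each block of floats shares one scale; values are stored as small signed ints.

def quantize_block(values, bits=4):
    """Quantize one block of floats to signed integers with a shared scale."""
    qmax = 2 ** (bits - 1) - 1                  # 7 for 4-bit signed values
    amax = max(abs(v) for v in values)          # largest magnitude in the block
    scale = amax / qmax if amax > 0 else 1.0    # avoid dividing by zero
    quants = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return scale, quants


def dequantize_block(scale, quants):
    """Recover approximate floats from a quantized block."""
    return [scale * q for q in quants]


def quantize(values, block_size=32, bits=4):
    """Split a flat list of weights into blocks and quantize each one."""
    return [
        quantize_block(values[start:start + block_size], bits)
        for start in range(0, len(values), block_size)
    ]


def dequantize(blocks):
    """Concatenate the dequantized blocks back into one flat list."""
    out = []
    for scale, quants in blocks:
        out.extend(dequantize_block(scale, quants))
    return out


# Hypothetical weights, used only to exercise the functions above.
weights = [((i * 37) % 101 - 50) / 50.0 for i in range(64)]
blocks = quantize(weights)
restored = dequantize(blocks)
worst_error = max(abs(a - b) for a, b in zip(weights, restored))
print(f"{len(blocks)} blocks, worst round-trip error {worst_error:.4f}")
```

Sharing one scale per block keeps the rounding error of each value within half a scale step, which is why small blocks tend to preserve accuracy better than a single scale for the whole tensor.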

Key Features for ggml.ai

Open-source simplicity

ggml.ai is open source under the MIT license, which permits use, modification, and redistribution with few restrictions. This keeps the code transparent and invites contributions from developers, who can study and extend the library for their own machine learning work.

Cross-platform support

ggml.ai runs on Mac, Windows, and Linux, so developers can build and deploy machine learning models in the environment they already use.

High-performance inference

ggml.ai is built for high-performance inference: it executes large models efficiently on commodity hardware, which makes advanced models practical for a wider audience.
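A rough back-of-the-envelope calculation shows why lower-precision weights matter for commodity hardware. The sketch below is illustrative only: the 7-billion-parameter model, the block size of 32, and the 16-bit per-block scale are assumptions for the arithmetic, not figures taken from ggml.ai.

```python
# Estimate weight storage for a hypothetical model at two precisions.
# All sizes below are assumptions made for the sake of the arithmetic.

def bits_per_weight(value_bits, block_size, scale_bits):
    """Average bits per weight when each block also stores one shared scale."""
    return value_bits + scale_bits / block_size


def model_bytes(n_params, bits):
    """Total bytes needed to store n_params weights at the given bit width."""
    return n_params * bits / 8


n_params = 7_000_000_000                      # hypothetical parameter count
fp16_bytes = model_bytes(n_params, 16)        # 16-bit floating point weights
q4_bits = bits_per_weight(4, 32, 16)          # 4-bit values plus a 16-bit scale per 32
q4_bytes = model_bytes(n_params, q4_bits)

print(f"16-bit weights: {fp16_bytes / 1e9:.2f} GB")
print(f"4-bit weights:  {q4_bytes / 1e9:.2f} GB at {q4_bits} bits per weight")
```

Under these assumptions the quantized weights take a little over a quarter of the 16-bit size, which is the difference between needing a workstation-class memory budget and fitting in an ordinary laptop's RAM.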
